So you think you want to write a deterministic hypervisor?

If you’ve read our launch post, you might remember this section:

We thought about this and decided to just go all out and write a hypervisor which emulates a deterministic computer. Consequently, we can force anything inside it to be deterministic.

Let’s expand on that a bit.

When Antithesis discovers a potential bug, we want to be able to reproduce it easily, explore it thoroughly, and provide our users with the information they most need to fix it. The deterministic hypervisor is the back-end component that gives us these capabilities.

But what is a deterministic hypervisor exactly? What’s special about it? How does it work? What are we doing with it, and, consequently, what can we do for you?

This is a high-level overview of a portion of the Antithesis platform. Nothing in this post is required to make use of Antithesis, but I’m hoping it demystifies our product a little bit.

If you prefer video content, check out the recording of my talk at the FreeBSD Developer Summit:

Defining determinism

Wikipedia’s definition of a deterministic algorithm is pretty solid for our purposes:

In computer science, a deterministic algorithm is an algorithm that, given a particular input, will always produce the same output, with the underlying machine always passing through the same sequence of states.

That last clause is important: it’s not just the result that is the same, but any underlying state as well — even hidden behavior that only manifests as obscure side-effects. When we have this sort of determinism, we can chain together several deterministic operations, or even trillions of them, and still have a consistent and knowable result.
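To make that definition concrete, here is a minimal sketch of what "same output, same sequence of states" means. The `step` and `run` functions are toy names invented for illustration; they are not part of Antithesis. The run records a digest of every intermediate state, so two runs only compare equal if they passed through identical states along the way.

```python
import hashlib


def step(state: int, value: int) -> int:
    """One deterministic operation: the next state depends only on its arguments."""
    return (state * 6364136223846793005 + value + 1442695040888963407) % 2**64


def run(inputs, initial_state=0):
    """Chain `step` over the inputs; return the final state and a digest of every state visited."""
    trace = hashlib.sha256()
    state = initial_state
    for value in inputs:
        state = step(state, value)
        trace.update(state.to_bytes(8, "little"))
    return state, trace.hexdigest()


inputs = list(range(10_000))
first = run(inputs)
second = run(inputs)
print(first == second)  # True: same result and same intermediate states
```

Comparing the digest rather than just the final value is the point: it catches hidden differences in the path taken, not only in the answer.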

In the world of software testing, determinism helps us attain reproducibility. Many software products have truly nasty bugs that linger for years primarily because they don’t show up consistently; this adds a ton of friction for engineers at every stage. These bugs tend to languish as open tickets because engineers cannot even begin to fix the code until they first scout out the bug and identify when and why it happens. What are the costs of never knowing if a bug really exists, and never knowing if you’ve truly fixed it? You’re always second-guessing yourself, always rerunning experiments over and over, always dealing in “maybes” instead of a clear “yes” or “no.”

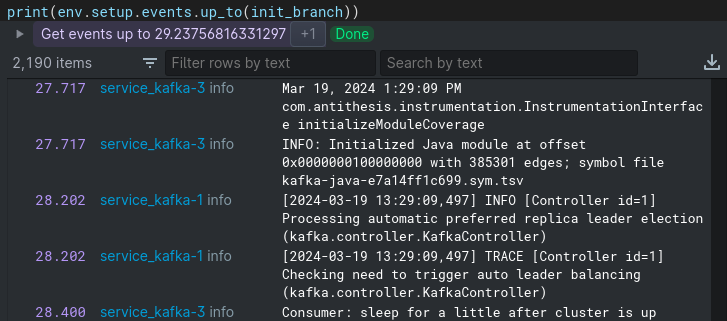

For the Antithesis platform, deterministic reproducibility allows us to generate useful artifacts of the bugs we’ve found, and it’s also a key enabler of time-travel debugging, and of the state-space exploration we use to find bugs in the first place.

My favorite benefit of reproducibility is that it allows destructive analysis of a buggy system state: I can freely extract before-and-after crash dumps, modify program data to see how it changes the behavior, and generally perturb the system as much as I want without fear. I know I’ll always be able to try something different if my first attempt doesn’t bear fruit. With flaky, hard-to-reproduce bugs in the wild, by contrast, there’s always this moment of terror when you’re attempting any kind of destructive analysis, because you know it’ll be a huge setback if you don’t learn something valuable on your first try.

Determinism in Antithesis

Most of software engineering practice focuses on reproducibility in small tests, such as unit tests, most likely because this feels more achievable than forcing any kind of determinism on big systems with millions of interconnected moving parts. But at Antithesis, most of our tools are aimed at big-picture end-to-end testing. We want to subject a full-featured deployment of your software to “thousands and thousands of crazy situations you’d never dreamed of,” in a simulated environment that captures a lot of the complexity and unpredictability of the real world without burying you in useless noise.

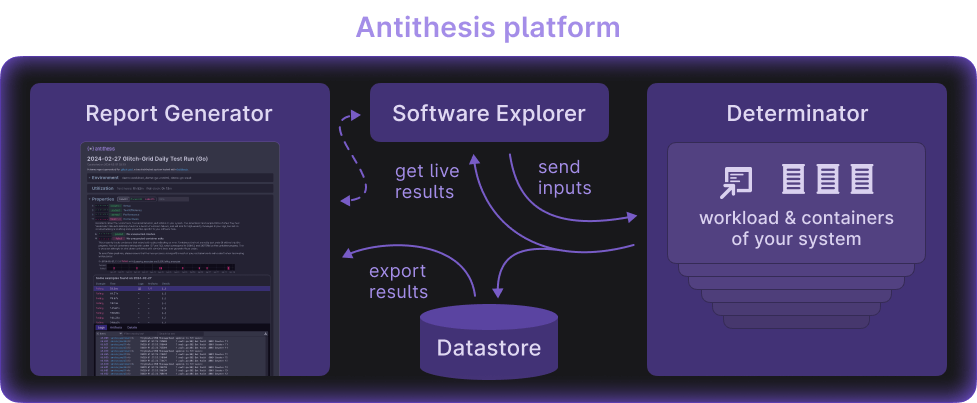

A key piece of the Antithesis platform is the deterministic hypervisor that actually runs the test workload.1 We call this thing “the Determinator.”

The Antithesis environment simulates one or more computers using a collection of containers, all running within a single virtual machine managed by our hypervisor. The unit of reproducibility is the state of the entire system/experiment/workload as an interconnected whole, not any single process or server within the system. This approach reduces the need for complicated, domain-specific mocks and test harnesses, since you can just run (for example) both your client and server software together in the same bubble of determinism and perfectly reproduce their synchronized state.

Our deterministic hypervisor’s job is to provide a big box for the whole end-to-end experimental setup to sit in. All of the customer’s software runs inside the box, mediated by simulation and fault-injection harnesses provided by Antithesis. Outside the box is a collection of tools that manage the exploration of the system under test. Everything in the hypervisor’s guest environment sees a single linear history. Outside of it, Antithesis can see every execution path ever visited: a tree of possible paths the experiment could take. With this knowledge, we can choose new experimental inputs or revisit previously seen ones for further analysis. The Determinator’s job is to enforce determinism on everything within the box and at the boundary.

Building the hypervisor

I was the first person to work on the Determinator, alongside our CTO, Dave Scherer.

We started with a piece of existing, mature open-source software – the FreeBSD project’s bhyve hypervisor. Bhyve is, of course, not a deterministic hypervisor – it’s a general-purpose hypervisor designed to run a variety of operating systems on real hardware, aiming for both low overhead and broad access to hardware features like the GPU. To build our deterministic fork of bhyve, we started by removing a lot of standard hypervisor functionality.

Why’s that? Well, the computers on your desk, in your pocket, and in the cloud weren’t really designed for end-to-end determinism. The real world is full of entropy, and standard computing hardware doesn’t hide all of it from you.2 So we tried to limit what exactly our hypervisor had to do, to start with a small foundation of deterministic behavior and then build outward incrementally.

A lot of the day-to-day work of building the Determinator involved categorizing the micro-level CPU behavior we encountered:

- Deterministic behavior: Anything with consistent results and manageable side-effects. We want to keep the handling of deterministic behavior maximally within the hypervisor guest, because context-switching reduces performance; sometimes we’ll still have to exit to use some resource provided by the host OS or a software-emulated device, of course.

- Nondeterministic behavior: Any machine behavior with inconsistent results or visibly inconsistent side-effects, including any kind of subtle butterfly effect that later permutes machine state. Another way to think of this is “determinism-destroying” behavior, so we must perfectly avoid it, contain it, or reverse it when it happens.

Modern CPUs are fiendishly complex; they have many elaborate optimizations and nearly half the history of computing baked into various modal settings and feature flags. Assumptions are dangerous here. The only way to know whether any CPU behavior is deterministic is to subject your whole system to very thorough tests.
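The simplest form of such a test is to run the same workload twice and look for the first place the two histories disagree. The sketch below is a toy model of that idea, not our actual test harness: `run_workload` and its `leak_at` parameter are hypothetical, and the "leak" is simulated by mixing in bytes from `os.urandom` at one chosen step.

```python
import os
import random
from itertools import zip_longest

_MISSING = object()  # sentinel so that traces of different lengths also count as diverging


def run_workload(seed, steps=1000, leak_at=None):
    """Toy workload: returns the state after every step. `leak_at` injects real entropy at one step."""
    rng = random.Random(seed)
    state = 0
    trace = []
    for i in range(steps):
        state = (state * 31 + rng.getrandbits(32)) % 2**64
        if i == leak_at:
            # Simulated determinism-destroying behavior.
            state ^= int.from_bytes(os.urandom(8), "little")
        trace.append(state)
    return trace


def first_divergence(trace_a, trace_b):
    """Return the index of the first differing record, or None if the traces match."""
    pairs = zip_longest(trace_a, trace_b, fillvalue=_MISSING)
    for index, (a, b) in enumerate(pairs):
        if a != b:
            return index
    return None


print(first_divergence(run_workload(7), run_workload(7)))
print(first_divergence(run_workload(7, leak_at=500), run_workload(7, leak_at=500)))
```

Knowing the first divergent step matters more than knowing that the runs differ: everything after that point is just the butterfly effect.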

A deterministic view of time

The biggest core constraint was clear long before we started writing anything, but it’s also the most difficult to work around. It’s the thing that haunts pretty much anyone who’s ever attempted to write a deterministic execution environment: time.

Even simple-seeming CPU operations with a well-defined result aren’t really deterministic when you include the question, “How much time did this take?” Modern CPUs incorporate a collection of pipelines and caches, which are all optimized for throughput and speed at the expense of a little bit of predictability. Zoom out to entire programs in the real world and their timing characteristics are greatly affected by multi-core concurrency, interrupts, the operating system’s process and I/O scheduler,3 and other extrinsic variables like network latency. Time nondeterminism can easily translate into data nondeterminism through a variety of means like racing transactions, interleaved event delivery, or simply reading the system clock.

In order to solve this problem, we looked for ways to impose time determinism: a requirement that the guest’s simulated clock values should be a function of only the deterministic state and execution history of the guest system. This starts with hiding all real-world timing information from software running inside the Antithesis testing environment. Every attempt to access a time source from inside the guest – reading TSC, reading HPET, etc. – returns a virtual time value computed by the hypervisor. The hard part is translating “deterministic state and execution history” into a real, measurable quantity. Like everyone else who’s attempted to write a deterministic hypervisor, we quickly ran into the limitations of the tools available for measurement. Here’s an example:

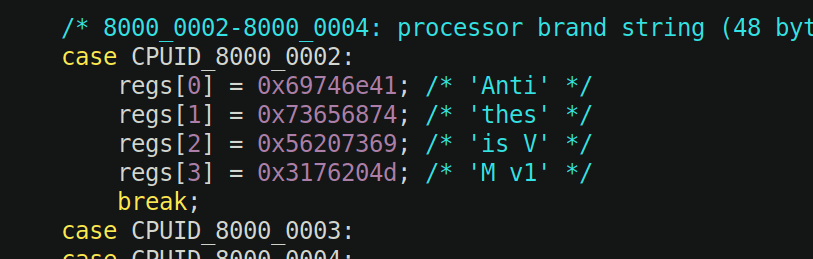

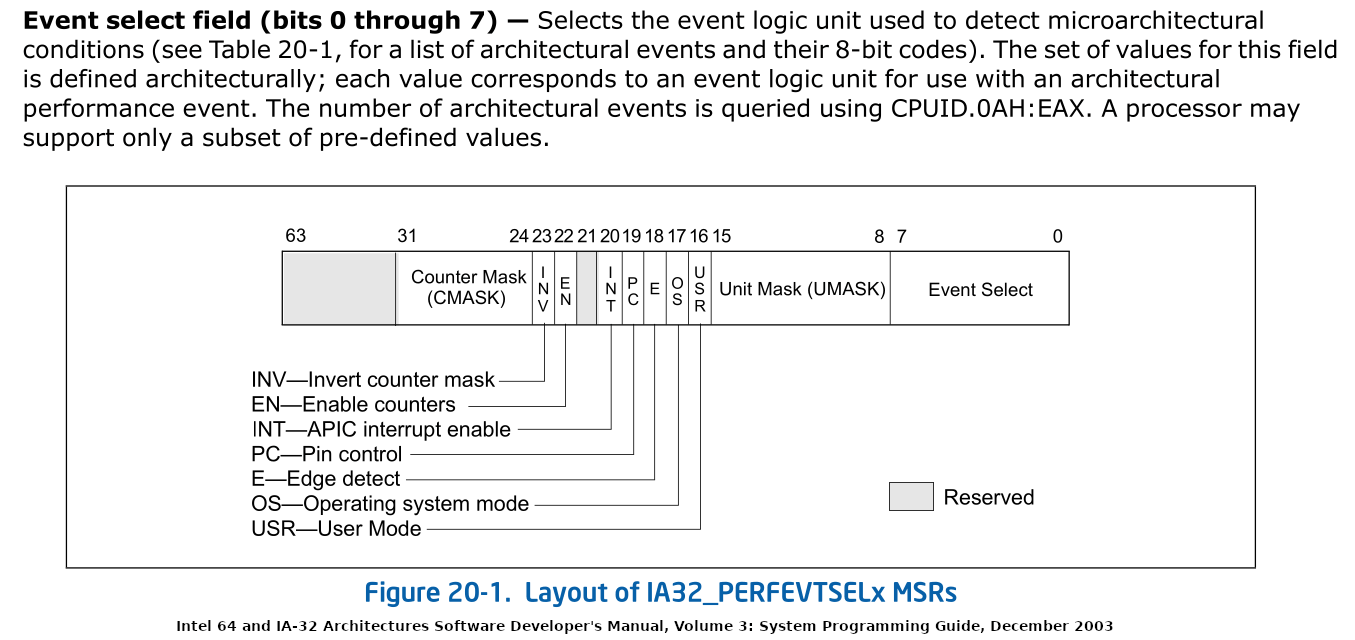
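As a rough illustration of the time-determinism requirement, here is a minimal sketch of a virtual clock whose value is a pure function of how much work the guest has done. The class, its parameters, and the choice of "instructions executed" as the unit of work are all assumptions made for the example; the real mapping is more involved.

```python
class VirtualClock:
    """Toy virtual clock: time is derived only from a deterministic count of guest work."""

    def __init__(self, cycles_per_instruction=1, frequency_hz=2_000_000_000):
        # Both parameters are illustrative assumptions, not real Determinator settings.
        self.cycles_per_instruction = cycles_per_instruction
        self.frequency_hz = frequency_hz
        self.instructions_executed = 0

    def advance(self, instructions):
        """Called by the (hypothetical) hypervisor as the guest makes progress."""
        self.instructions_executed += instructions

    def read_tsc(self):
        """What a guest would see when it reads the timestamp counter."""
        return self.instructions_executed * self.cycles_per_instruction

    def read_nanoseconds(self):
        """The same virtual time expressed as nanoseconds since boot."""
        return self.read_tsc() * 1_000_000_000 // self.frequency_hz


clock = VirtualClock()
clock.advance(2_000_000)
print(clock.read_tsc(), clock.read_nanoseconds())  # 2000000 1000000
```

Nothing in this clock ever consults the host's wall-clock time, so two runs that do the same work always read the same time.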

It feels really natural to peg simulated cycle time to instruction processing, since that transparently guarantees that time progresses in proportion to the work done by the CPU. The current version of the Determinator is designed to run on modern Intel server CPUs, using Intel’s VMX (Virtual Machine Extensions) features for hardware-supported virtualization. These chips also provide Performance Monitoring Counters (PMCs), which can be programmed to collect statistics about the behavior of the CPU. Like many researchers and hobbyists before us, we were drawn to the possibility of using the instructions-retired count to drive the simulated clock. However, we had to invent workarounds for two very notable limitations:

- The PMC instructions-retired count isn’t quite deterministic, even in its special “precision” mode. Based on our testing, about one in a trillion instructions would be miscounted due to some unknown quirk of the CPU (we speculate it’s something to do with pipelining, branch prediction, or external interrupt handling).

- PMC supports a threshold notification system, where you set up the counter as a “countdown” and get an interrupt when the value reaches zero. However, since this interrupt is delivered through the APIC, dozens of instructions will be processed before the CPU is actually notified of the threshold event. Moreover, this interrupt delivery overhead is quite varied when measured in CPU cycles, meaning that you really don’t know how long ago an interrupt was triggered from the time you receive it.

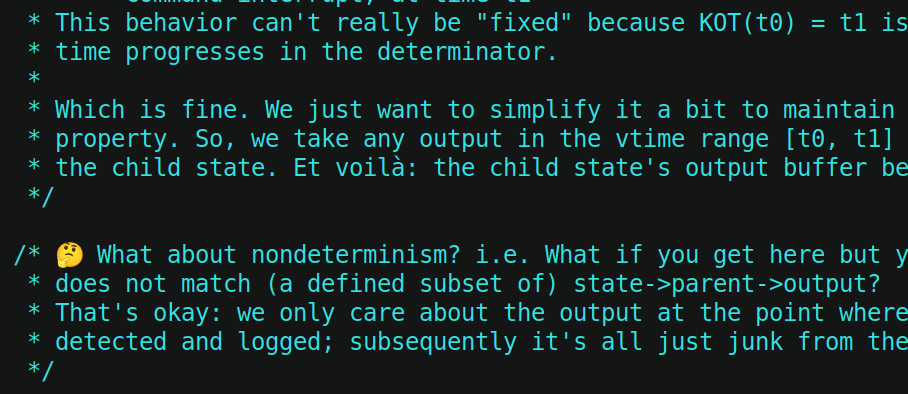
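To give a feel for the second limitation, here is a toy model of one general way to cope with a late, variable-latency counter interrupt: arm the counter early by a safety margin, then single-step the remaining distance. This is not a description of the Determinator's actual workaround. `ToyCpu`, `MAX_SKID`, and `stop_exactly_at` are hypothetical names, and the assumption that the skid has a known upper bound is exactly the kind of thing you'd have to verify by testing.

```python
import random


class ToyCpu:
    """Toy CPU whose counter interrupt arrives a variable number of instructions late."""

    MAX_SKID = 64  # assumed upper bound on interrupt skid, in instructions

    def __init__(self, skid_rng):
        self.retired = 0
        self._skid_rng = skid_rng

    def run_until_interrupt(self, threshold):
        """Run freely; we regain control somewhere between threshold and threshold + MAX_SKID."""
        self.retired = threshold + self._skid_rng.randint(0, self.MAX_SKID)

    def single_step(self):
        """Execute exactly one instruction (slow but precise)."""
        self.retired += 1


def stop_exactly_at(cpu, target):
    """Stop the toy CPU after exactly `target` retired instructions."""
    if target < cpu.retired:
        raise ValueError("target is already in the past")
    early = target - cpu.MAX_SKID
    if early > cpu.retired:
        cpu.run_until_interrupt(early)  # lands at or before target, never past it
    while cpu.retired < target:
        cpu.single_step()
    return cpu.retired


cpu = ToyCpu(random.Random())  # skid is deliberately unseeded: it varies from run to run
print(stop_exactly_at(cpu, 10_000))  # 10000, regardless of the skid
```

The trade-off is speed: the margin has to cover the worst-case skid, and every instruction inside the margin costs a full single-step.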

Solving problems like this involved a lot of iteration and experimentation. We were pushing various features of the CPU just a bit past what they were really designed for, which meant carefully probing their real, undocumented functionality every step of the way. Some of the most important code written during the early development of the Determinator was an alternative kernel logging subsystem. Our starting point for determinism testing was rabidly hyperactive logging, which taught us that FreeBSD’s standard kernel log could drop messages when slammed with massive amounts of data. So we had to write our own module-specific logger that both maintained large buffers of data and could pause the VM to flush that data proactively before it ran out of room. Only once we were reliably capturing and analyzing like 50 GiB of output per 20-minute run could we actually pin down, disentangle, and incrementally solve some subtle non-determinisms.
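The core idea of that logger, pausing the producer instead of dropping data, fits in a few lines. The sketch below is a user-space toy and not the kernel module itself; `PausingLogBuffer` and the `pause_vm`, `resume_vm`, and `write_out` hooks are hypothetical names.

```python
class PausingLogBuffer:
    """Buffers records and pauses the producer to flush, rather than dropping anything."""

    def __init__(self, capacity, write_out, pause_vm, resume_vm):
        self._capacity = capacity
        self._write_out = write_out  # hypothetical sink, e.g. appends to a file
        self._pause_vm = pause_vm    # hypothetical hook: stop the guest
        self._resume_vm = resume_vm  # hypothetical hook: let the guest continue
        self._records = []

    def log(self, record):
        if len(self._records) >= self._capacity:
            self.flush()  # make room first; never drop a record
        self._records.append(record)

    def flush(self):
        if not self._records:
            return
        self._pause_vm()
        try:
            self._write_out(self._records)
            self._records = []
        finally:
            self._resume_vm()


sink, events = [], []
log_buffer = PausingLogBuffer(
    capacity=256,
    write_out=sink.extend,
    pause_vm=lambda: events.append("pause"),
    resume_vm=lambda: events.append("resume"),
)
for i in range(10_000):
    log_buffer.log(i)
log_buffer.flush()
print(len(sink))  # 10000: every record survived
```

Because the guest is paused while the buffer drains, the time spent flushing doesn't need to leak into anything the guest can observe.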

CPU parallelism

Parallelism, ultimately, is also a time problem. When multiple cores are acting concurrently, those operations can be interleaved in more-or-less arbitrary ways. Getting the final result to be sane and useful is the product of billions of dollars of engineering effort and many thousands of Computer Science PhD theses. We were interested in more than just the final result, however: in order to maintain a consistent machine state, we really wanted step-by-step instruction-level determinism, which is a much more onerous burden than just making sure certain kinds of writes and reads are well-ordered and atomic.

Our plan here was to simply isolate the cores. Each instance of the deterministic hypervisor runs on just one physical CPU core. When a real processor has 48 or 96 cores, we spin up that many separate VMs, each pinned to a different physical core, exploring different parts of the possible program state in parallel. The combined throughput of the system – its ability to explore the state space and perform many simultaneous experiments – is quite high, which matters more to us than the wall-clock speed of any single VM in isolation.4

Guest software running in the Antithesis platform still experiences concurrency similar to a multi-core / multi-machine system, thanks to the process scheduling imposed by the guest OS. Since the Antithesis platform controls the guest’s scheduler, we can also use it as a fault injection mechanism (for example, via thread starvation). Our ability to artificially induce fault conditions that would normally be rare allows Antithesis to efficiently hunt for race conditions or other hard-to-pin-down concurrency bugs.
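Here is a minimal sketch of why a controlled scheduler is such a useful tool for hunting races. It is a toy built on Python generators, not the guest's real scheduler: two "threads" do an unprotected read-modify-write on a shared counter, and a seeded random scheduler decides who runs at each preemption point. All names are invented for the example.

```python
import random


def incrementer(shared, rounds):
    """A 'thread' that increments a shared counter without any locking."""
    for _ in range(rounds):
        value = shared["counter"]      # read
        yield                          # preemption point between read and write
        shared["counter"] = value + 1  # write (may clobber another thread's update)
        yield


def run_schedule(seed, workers=2, rounds=100):
    """Interleave the workers under a seeded scheduler; return the final counter and the schedule."""
    rng = random.Random(seed)
    shared = {"counter": 0}
    runnable = [incrementer(shared, rounds) for _ in range(workers)]
    schedule = []
    while runnable:
        index = rng.randrange(len(runnable))
        schedule.append(index)
        try:
            next(runnable[index])
        except StopIteration:
            runnable.pop(index)
    return shared["counter"], schedule


print(run_schedule(1) == run_schedule(1))  # True: same seed, same interleaving, same outcome
lost = [seed for seed in range(20) if run_schedule(seed)[0] < 200]
print(len(lost) > 0)                       # some seeds expose the lost-update bug
```

Once a seed exposes the bug, rerunning with that seed reproduces the exact interleaving that caused it, which is what makes the race debuggable.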

Deterministic I/O

The other mandatory piece of the Determinator design was a deterministic input/output channel. Connecting a deterministic system to a nondeterministic communication pathway just renders the whole thing nondeterministic. That’s why Antithesis simulates an entire environment within one deterministic VM, with an abstract (and fault-injected) simulation of network communication, data storage, etc. However, because the experimental world inside the Determinator’s “magic box” is purely deterministic, we needed some controlled way to inject variance from the outside world in order to guide it towards different possible system states. We also wanted a simple and performant way to exfiltrate data from the guest environment.

We implemented deterministic input/output using the Intel x86 architecture’s VMCALL instruction (known as VMMCALL on AMD). This is basically a roll-your-own-instruction facility for hypervisor writers – an instruction that does nothing but context-switch from guest to host. Expanding its functionality beyond that is mostly a matter of making sure that both guest and host are using the same API. Our custom instruction allows software running inside the guest to emit interesting data (such as log messages) to the rest of the Antithesis platform and ingest commands or RNG seeds that are used to control guest behavior.
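The "same API on both sides" idea can be sketched as a small dispatch table. This is a toy model in Python, with a function call standing in for the guest-to-host exit; the `Hypercall` numbers, the `Host` class, and `guest_program` are all hypothetical and do not reflect our real interface.

```python
from enum import IntEnum


class Hypercall(IntEnum):
    """Call numbers shared by guest and host (hypothetical values)."""
    EMIT_LOG = 1
    GET_SEED = 2


class Host:
    """Stands in for the hypervisor side: handles each exit and returns a result to the guest."""

    def __init__(self, seeds):
        self._seeds = iter(seeds)  # inputs chosen by the outside world
        self.log = []              # data exfiltrated from the guest

    def handle_exit(self, number, payload=b""):
        if number == Hypercall.EMIT_LOG:
            self.log.append(payload)
            return 0
        if number == Hypercall.GET_SEED:
            return next(self._seeds)
        raise ValueError(f"unknown hypercall {number}")


def guest_program(vmcall):
    """Stands in for guest software; `vmcall` plays the role of the exit instruction."""
    seed = vmcall(Hypercall.GET_SEED)
    vmcall(Hypercall.EMIT_LOG, f"booted with seed {seed}".encode())
    return seed


host = Host(seeds=[42])
print(guest_program(host.handle_exit))  # 42
print(host.log)                         # [b'booted with seed 42']
```

Because every byte that crosses the boundary goes through this one narrow channel, the host can record it, and replaying the same answers replays the same guest.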

The points in execution history where the guest ingests input from the Antithesis platform become possible branch points for future execution. Consequently, the external view of the exploration of a system is an input tree. Later on, we also added interrupt injection to “push” actions into the guest when we wanted to preempt its current activity instead of waiting for the next predetermined I/O exchange point.5
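A minimal sketch of the input tree: each node is one input handed to the guest, and the path from the root to any node is a complete recipe for reaching that state. `InputNode` and `replay` are toy names for illustration. For simplicity this `replay` starts from the beginning every time, which a real system would want to avoid.

```python
class InputNode:
    """One input delivered to the guest; children are the different inputs tried next."""

    def __init__(self, value=None, parent=None):
        self.value = value
        self.parent = parent
        self.children = {}

    def child(self, value):
        """Return the branch for `value`, creating it on first visit."""
        if value not in self.children:
            self.children[value] = InputNode(value, self)
        return self.children[value]

    def path(self):
        """The sequence of inputs from the root to this node."""
        node, values = self, []
        while node.parent is not None:
            values.append(node.value)
            node = node.parent
        return values[::-1]


def replay(inputs):
    """Toy deterministic guest: its state is a pure function of the inputs it has consumed."""
    state = 0
    for value in inputs:
        state = (state * 1_000_003 + value) % 2**61
    return state


root = InputNode()
a = root.child(1).child(2)
b = root.child(1).child(3)  # shares the first input with `a`, then branches
print(a.path(), b.path())   # [1, 2] [1, 3]
print(replay(a.path()) == replay([1, 2]))  # True: the path fully determines the state
```

Inside the guest, each of these paths looks like the only history there ever was; the tree only exists from the outside.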

One of many building blocks

That’s almost it for the basics. I’m necessarily leaving out a ton of detail, of course, both for the sake of brevity and competitive edge. I’ve also left out one key design pillar of Antithesis’s deterministic hypervisor: all the functionality that allows efficient state exploration and time-travel debugging. (We are not replaying every single execution path from the beginning – we would not waste your time like that!) It just felt like too much to absorb in one post, so we’ll have to return to it some other time.

Our particular implementation of a deterministic hypervisor is idiosyncratic in places, since it’s designed around the needs of the entire Antithesis platform: throughput over latency, abstractly simulated devices representing entire systems in one VM, deterministic replay both in the moment and from cold storage, time-travel debugging with support for artifact extraction and probability analysis, and more.

The Antithesis testing platform consists of a lot of innovative pieces working together, and we’re really proud of what we’ve built. This discussion of one individual piece doesn’t capture how they all fit together (see our system documentation for more information on that), but we hope it’s been fun and illuminating in its own right.

We have a lot of irons in the fire right now – the Determinator is only a starting point in our scheme to transform software testing and simulation. We’ll tell you a lot more over the coming months and years. But… maybe you want to get in on building this borderline-impossible stuff right now. If that’s you, check our Careers page and get in touch with us.